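1) sudo -i -u <user> (runs a login shell as the target user, loading that user's login environment)

Example: sudo -i -u oracle

or

2) sudo -su <user> (short for sudo -s -u <user>; runs a shell as the target user without login initialization)

Example: sudo -su oracle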



…, or the WebLogic Scripting Tool (WLST).


unzip p16199894_111170_Generic.zip

2. Set environment variables, such as the Oracle Home to be patched and the PATH:

export ORACLE_HOME=/u9000/app/oracle/product/fmw/oracle_common
export PATH=$PATH:$ORACLE_HOME/OPatch
which opatch

3. Verify the patches already applied to the Oracle Home by running the command below, both before and after patching:

opatch lsinventory

4. Change to the PATCH_TOP directory and apply the patch to the Oracle Home with the command below:

cd /u9000/soa_soasuite_shared/source/16199894
opatch apply

or

../../OPatch/opatch apply

Upgrade Oracle Forms and Reports JDK to match the WebLogic Server JDK

1. Shut down all the services on the server.

2. Move the existing Oracle Forms and Reports JDK aside under a different name:

mv /u9000/app/oracle/product/fmw/Oracle_FRHome1/jdk /u9000/app/oracle/product/jdk1.7.0_25/old_java

3. Copy the WebLogic JDK to the Oracle Forms and Reports Home and rename it to jdk:

cp -rp /u9000/app/oracle/product/jdk1.7.0_25 /u9000/app/oracle/product/fmw/Oracle_FRHome1
mv /u9000/app/oracle/product/fmw/Oracle_FRHome1/jdk1.7.0_25 /u9000/app/oracle/product/fmw/Oracle_FRHome1/jdk

4. Restart the services on the server.

Note: This tutorial uses version 1.12.0-rc2 of Docker. If you find any part of the tutorial incompatible with a future version, please raise an issue. Thanks!

$ docker run hello-world

Hello from Docker.

This message shows that your installation appears to be working correctly.

...

$ python --version

Python 2.7.11

$ pip --version

pip 7.1.2 from /Library/Python/2.7/site-packages/pip-7.1.2-py2.7.egg (python 2.7)

Running java -version in your terminal should give you output similar to the following:

$ java -version

java version "1.8.0_60"

Java(TM) SE Runtime Environment (build 1.8.0_60-b27)

Java HotSpot(TM) 64-Bit Server VM (build 25.60-b23, mixed mode)

…docker run command.

$ docker pull busybox

Note: Depending on how you've installed Docker on your system, you might see a permission denied error after running the above command. If you're on a Mac, make sure the Docker engine is running. If you're on Linux, prefix your docker commands with sudo. Alternatively, you can create a docker group to get rid of this issue.

The pull command fetches the busybox image from the Docker registry and saves it to our system. You can use the docker images command to see a list of all images on your system.

$ docker images

REPOSITORY TAG IMAGE ID CREATED VIRTUAL SIZE

busybox latest c51f86c28340 4 weeks ago 1.109 MB

…docker run command.

$ docker run busybox

$

When you call run, the Docker client finds the image (busybox in this case), loads up the container and then runs a command in that container. When we ran docker run busybox, we didn't provide a command, so the container booted up, ran an empty command and then exited. Well, yeah, kind of a bummer. Let's try something more exciting.

$ docker run busybox echo "hello from busybox"

hello from busybox

In this case, the Docker client ran the echo command in our busybox container and then exited. As you may have noticed, all of that happened pretty quickly. Imagine booting up a virtual machine, running a command and then killing it. Now you know why they say containers are fast! Okay, now it's time to look at the docker ps command, which shows you all containers that are currently running.

$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

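Since no containers are running, we only see the header line. Let's try a more useful variant, docker ps -a, which lists all containers, including those that have exited:

$ docker ps -a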

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

305297d7a235 busybox "uptime" 11 minutes ago Exited (0) 11 minutes ago distracted_goldstine

ff0a5c3750b9 busybox "sh" 12 minutes ago Exited (0) 12 minutes ago elated_ramanujan

The STATUS column shows that these containers exited a few minutes ago.

$ docker run -it busybox sh

/ # ls

bin dev etc home proc root sys tmp usr var

/ # uptime

05:45:21 up 5:58, 0 users, load average: 0.00, 0.01, 0.04

Running the run command with the -it flags attaches us to an interactive tty in the container. Now we can run as many commands in the container as we want. Take some time to run your favorite commands.

Danger Zone: If you're feeling particularly adventurous, you can try rm -rf bin in the container. Make sure you run this command in the container and not on your laptop. Doing this will stop other commands like ls and echo from working. Once everything stops working, you can exit the container and then start it up again with the docker run -it busybox sh command. Since Docker creates a new container every time, everything should start working again.

…docker run command, which will most likely be the command you use most often, so it makes sense to spend some time getting comfortable with it. To find out more about run, use docker run --help to see a list of all the flags it supports. As we proceed further, we'll see a few more variants of docker run.

…docker ps -a. Throughout this tutorial, you'll run docker run multiple times, and leaving stray containers around will eat up disk space. Hence, as a rule of thumb, I clean up containers once I'm done with them. To do that, you can run the docker rm command. Just copy the container IDs from above and paste them alongside the command.

$ docker rm 305297d7a235 ff0a5c3750b9

305297d7a235

ff0a5c3750b9
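Copying IDs by hand gets tedious. Conceptually, picking out finished containers just means filtering the docker ps -a rows on their STATUS column. The Python sketch below illustrates that idea only. The rows are typed in from the listing above, the third (running) row is a hypothetical extra, and exited_ids is a hypothetical helper. Docker's own status filter works on the container's state, not on this display text.

```python
from typing import Dict, List


def exited_ids(rows: List[Dict[str, str]]) -> List[str]:
    """Return the IDs of containers whose STATUS text starts with 'Exited'."""
    return [row["id"] for row in rows if row["status"].startswith("Exited")]


# Rows copied from the docker ps -a listing above, plus one hypothetical running container.
rows = [
    {"id": "305297d7a235", "status": "Exited (0) 11 minutes ago"},
    {"id": "ff0a5c3750b9", "status": "Exited (0) 12 minutes ago"},
    {"id": "e931ab24dedc", "status": "Up 2 seconds"},
]

print(" ".join(exited_ids(rows)))  # 305297d7a235 ff0a5c3750b9
```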

$ docker rm $(docker ps -a -q -f status=exited)

This command deletes all containers that have a status of exited. In case you're wondering, the -q flag only returns the numeric IDs, and -f filters the output based on the conditions provided. One last thing that will be useful is the --rm flag, which can be passed to docker run to automatically delete the container once it exits. For one-off docker runs, the --rm flag is very useful.

…docker rmi.

…docker pull command to download the busybox image.

…docker run, which we did using the busybox image that we downloaded. A list of running containers can be seen using the docker ps command.

…docker run, played with a Docker container and also got the hang of some terminology. Armed with all this knowledge, we are now ready to get to the real stuff, i.e. deploying web applications with Docker!

…prakhar1989/static-site. We can download and run the image directly in one go using docker run.

$ docker run prakhar1989/static-site

…Nginx is running... message in your terminal. Okay, now that the server is running, how do we see the website? What port is it running on? And more importantly, how do we access the container directly from our host machine?

…docker run command to publish ports. While we're at it, we should also find a way to keep our terminal detached from the running container. This way, you can happily close your terminal and keep the container running. This is called detached mode.

$ docker run -d -P --name static-site prakhar1989/static-site

e61d12292d69556eabe2a44c16cbd54486b2527e2ce4f95438e504afb7b02810

In the above command, -d will detach our terminal, -P will publish all exposed ports to random ports, and finally --name corresponds to the name we want to give the container. Now we can see the ports by running the docker port [CONTAINER] command:

$ docker port static-site

80/tcp -> 0.0.0.0:32769

443/tcp -> 0.0.0.0:32768

Note: If you're using docker-toolbox, you might need to use docker-machine ip default to get the IP.
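Because -P picks random host ports, scripts usually have to look them up. The sketch below turns the docker port output above into a Python dictionary. It assumes the `port/proto -> host_ip:host_port` line format shown above. parse_docker_port is a hypothetical helper, and in practice you would feed it the captured output of docker port static-site instead of a hard-coded string.

```python
from typing import Dict, Tuple


def parse_docker_port(output: str) -> Dict[str, Tuple[str, int]]:
    """Map 'port/proto' to (host_ip, host_port) using the text printed by docker port."""
    mappings: Dict[str, Tuple[str, int]] = {}
    for line in output.splitlines():
        if "->" not in line:
            continue  # skip blank or unexpected lines
        container_side, host_side = (part.strip() for part in line.split("->", 1))
        # rpartition splits on the last colon, so an IPv6 binding like '[::]:32769' also parses.
        host_ip, _, host_port = host_side.rpartition(":")
        # Keep the first binding listed for each container port.
        mappings.setdefault(container_side, (host_ip, int(host_port)))
    return mappings


sample = """80/tcp -> 0.0.0.0:32769
443/tcp -> 0.0.0.0:32768"""

ports = parse_docker_port(sample)
print(f"http://localhost:{ports['80/tcp'][1]}")  # http://localhost:32769
```

With docker-toolbox, swap localhost for the address printed by docker-machine ip default.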

$ docker run -p 8888:80 prakhar1989/static-site

Nginx is running...
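In -p 8888:80, the part before the colon is the host port and the part after it is the container port (where Nginx listens). The site is therefore reachable on port 8888 of your host.

The minimal sketch below shows how the simple forms of a -p value break down. parse_publish and PortSpec are hypothetical names. The sketch deliberately ignores port ranges and IPv6 host addresses, which the real flag also accepts.

```python
from typing import NamedTuple


class PortSpec(NamedTuple):
    host_ip: str         # empty string means "all interfaces"
    host_port: str       # empty string means "let Docker pick a free port"
    container_port: str
    protocol: str


def parse_publish(value: str) -> PortSpec:
    """Split a simple IPv4-style -p value such as '8888:80' or '127.0.0.1:8888:80/udp'."""
    spec, _, protocol = value.partition("/")
    parts = spec.split(":")
    if len(parts) == 1:          # '80': container port only
        host_ip, host_port, container_port = "", "", parts[0]
    elif len(parts) == 2:        # 'HOST_PORT:CONTAINER_PORT'
        host_ip = ""
        host_port, container_port = parts
    elif len(parts) == 3:        # 'IP:HOST_PORT:CONTAINER_PORT'
        host_ip, host_port, container_port = parts
    else:
        raise ValueError(f"unsupported -p value: {value!r}")
    return PortSpec(host_ip, host_port, container_port, protocol or "tcp")


print(parse_publish("8888:80"))
# PortSpec(host_ip='', host_port='8888', container_port='80', protocol='tcp')
```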

…docker stop by giving the container ID.

…docker images command.

$ docker images

REPOSITORY TAG IMAGE ID CREATED VIRTUAL SIZE

prakhar1989/catnip latest c7ffb5626a50 2 hours ago 697.9 MB

prakhar1989/static-site latest b270625a1631 21 hours ago 133.9 MB

python 3-onbuild cf4002b2c383 5 days ago 688.8 MB

martin/docker-cleanup-volumes latest b42990daaca2 7 weeks ago 22.14 MB

ubuntu latest e9ae3c220b23 7 weeks ago 187.9 MB

busybox latest c51f86c28340 9 weeks ago 1.109 MB

hello-world latest 0a6ba66e537a 11 weeks ago 960 B

The TAG refers to a particular snapshot of the image, and the IMAGE ID is the corresponding unique identifier for that image.

If you don't specify a tag, Docker defaults to latest. For example, you can pull a specific version of the ubuntu image:

$ docker pull ubuntu:12.04
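Image references follow a repository[:tag] pattern, and the tag falls back to latest when omitted. The Python sketch below shows that splitting rule. parse_image_ref is a hypothetical helper, not Docker's own parser. It only handles the name:tag forms used in this tutorial (plus a registry host with a port) and ignores digest references such as name@sha256:<digest>.

```python
from typing import NamedTuple


class ImageRef(NamedTuple):
    repository: str
    tag: str


def parse_image_ref(ref: str) -> ImageRef:
    """Split 'repository[:tag]', defaulting the tag to 'latest' when none is given."""
    last_slash = ref.rfind("/")
    colon = ref.rfind(":")
    # Only a colon after the last '/' starts a tag; an earlier one is a registry
    # port, as in localhost:5000/app, and belongs to the repository name.
    if colon > last_slash:
        return ImageRef(ref[:colon], ref[colon + 1:])
    return ImageRef(ref, "latest")


for ref in ["ubuntu:12.04", "busybox", "prakhar1989/static-site"]:
    print(parse_image_ref(ref))
```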

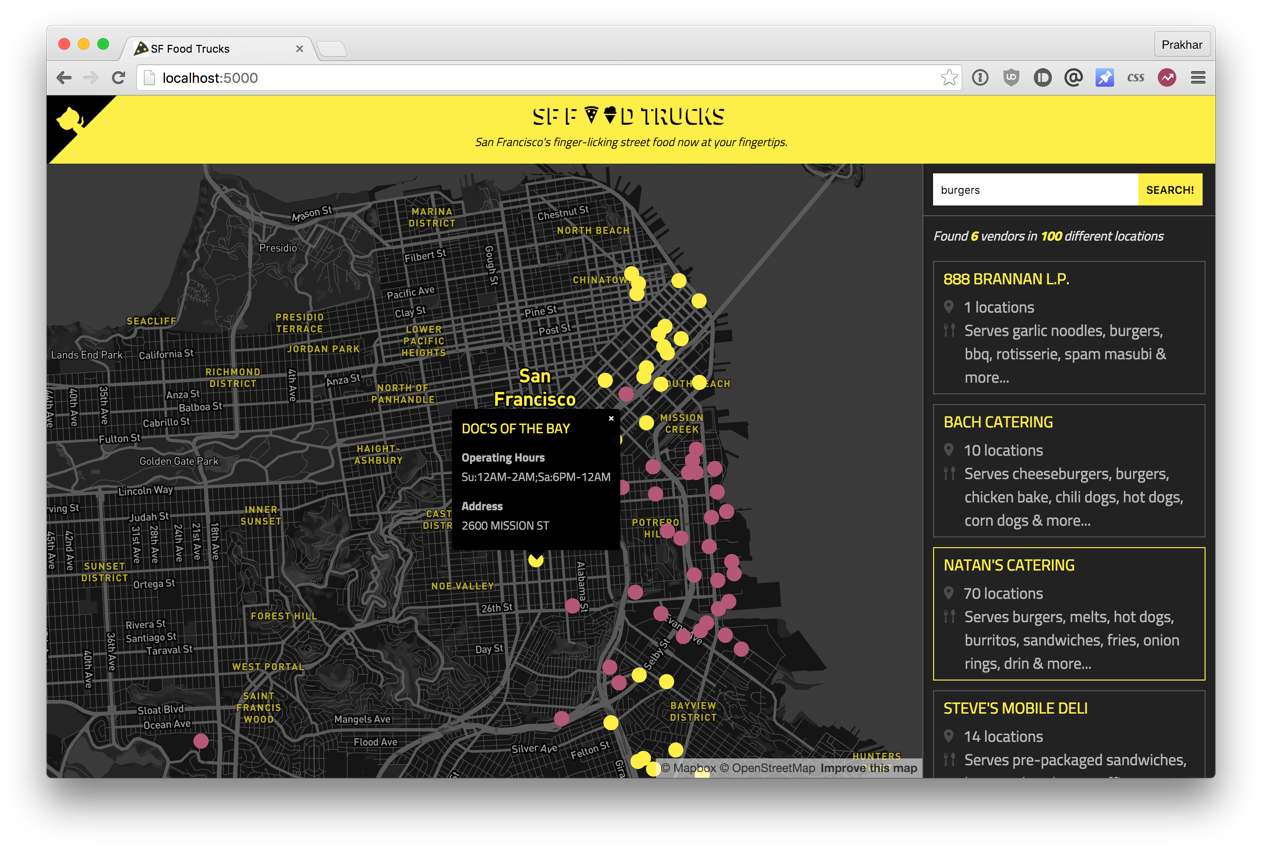

…docker search.

…python, ubuntu, busybox and hello-world images are base images.

…user/image-name.

… .gif every time it is loaded, because, you know, who doesn't like cats? If you haven't already, please go ahead and clone the repository locally.

Then cd into the flask-app directory and install the dependencies:

$ cd flask-app

$ pip install -r requirements.txt

$ python app.py

* Running on http://0.0.0.0:5000/ (Press CTRL+C to quit)

Note: If pip install gives you permission denied errors, you might need to try running the command as sudo. If you prefer not to install packages system-wide, you can instead try pip install --user -r requirements.txt.

…python:3-onbuild version of the python image.

What's the onbuild version, you might ask? These images include multiple ONBUILD triggers, which should be all you need to bootstrap most applications. The build will COPY a requirements.txt file, RUN pip install on said file, and then copy the current directory into /usr/src/app.

In other words, the onbuild version of the image includes helpers that automate the boring parts of getting an app running. Rather than doing these tasks manually (or scripting them), these images do that work for you. We now have all the ingredients to create our own image: a functioning web app and a base image. How are we going to do that? The answer is: using a Dockerfile.

…Dockerfile.

…FROM keyword to do that:

FROM python:3-onbuild

…onbuild version of the image takes care of that. The next thing we need to specify is the port number that needs to be exposed. Since our Flask app runs on port 5000, that's what we'll indicate.

EXPOSE 5000

…python ./app.py. We use the CMD command to do that:

CMD ["python", "./app.py"]

The primary purpose of CMD is to tell the container which command it should run when it is started. With that, our Dockerfile is now ready. This is what it looks like:

# our base image

FROM python:3-onbuild

# specify the port number the container should expose

EXPOSE 5000

# run the application

CMD ["python", "./app.py"]

Now that we have our Dockerfile, we can build our image. The docker build command does the heavy lifting of creating a Docker image from a Dockerfile.

…docker build command is quite simple: it takes an optional tag name with -t and the location of the directory containing the Dockerfile.

$ docker build -t prakhar1989/catnip .

Sending build context to Docker daemon 8.704 kB

Step 1 : FROM python:3-onbuild

# Executing 3 build triggers...

Step 1 : COPY requirements.txt /usr/src/app/

---> Using cache

Step 1 : RUN pip install --no-cache-dir -r requirements.txt

---> Using cache

Step 1 : COPY . /usr/src/app

---> 1d61f639ef9e

Removing intermediate container 4de6ddf5528c

Step 2 : EXPOSE 5000

---> Running in 12cfcf6d67ee

---> f423c2f179d1

Removing intermediate container 12cfcf6d67ee

Step 3 : CMD python ./app.py

---> Running in f01401a5ace9

---> 13e87ed1fbc2

Removing intermediate container f01401a5ace9

Successfully built 13e87ed1fbc2

If you don't have the python:3-onbuild image, the client will first pull the image and then create your image. Hence, your output from running the command will look different from mine. Look carefully and you'll notice that the on-build triggers were executed correctly. If everything went well, your image should be ready! Run docker images and see if your image shows up.

$ docker run -p 8888:5000 prakhar1989/catnip

* Running on http://0.0.0.0:5000/ (Press CTRL+C to quit)

$ docker push prakhar1989/catnip

$ docker login

Username: prakhar1989

WARNING: login credentials saved in /Users/prakhar/.docker/config.json

Login Succeeded

…username/image_name so that the client knows where to publish.

Note: One thing that I'd like to clarify before we go ahead is that it is not imperative to host your image on a public registry (or any registry) in order to deploy to AWS. In case you're writing code for the next million-dollar unicorn startup, you can totally skip this step. The reason we're pushing our images publicly is that it makes deployment super simple by skipping a few intermediate configuration steps.

$ docker run -p 8888:5000 prakhar1989/catnip

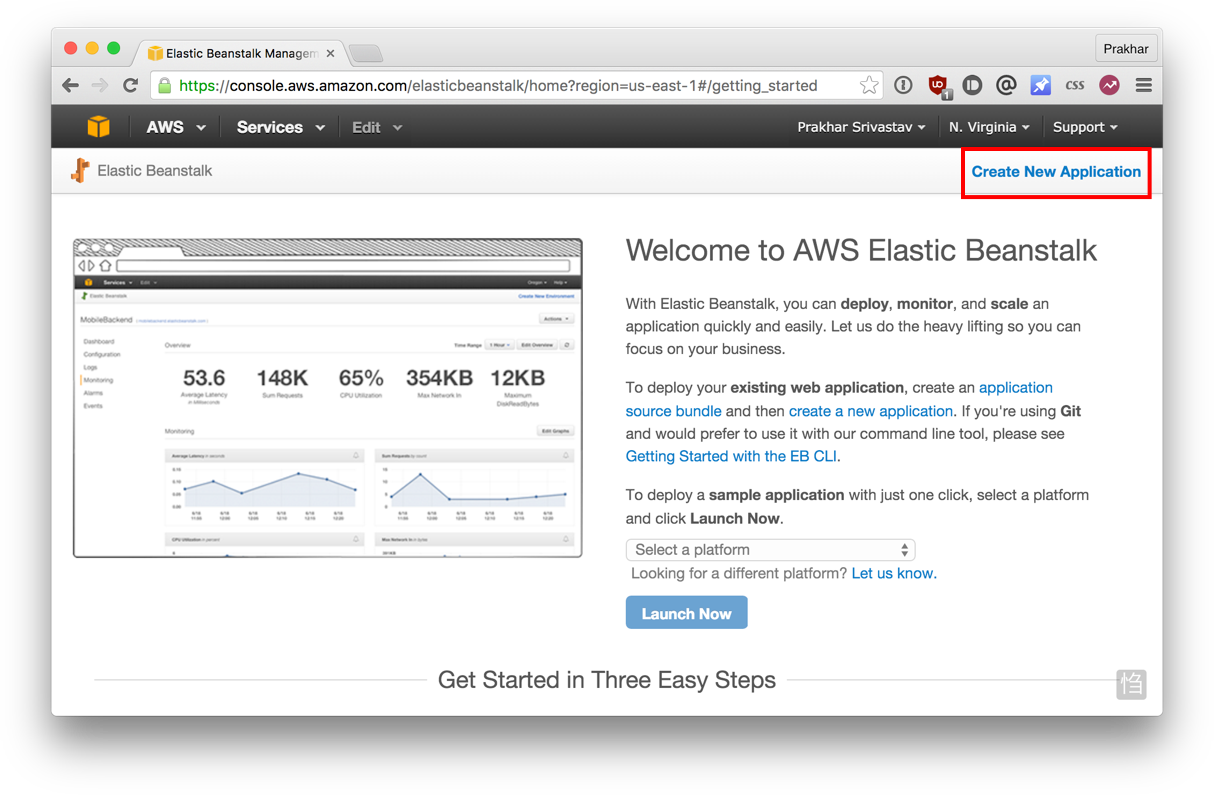

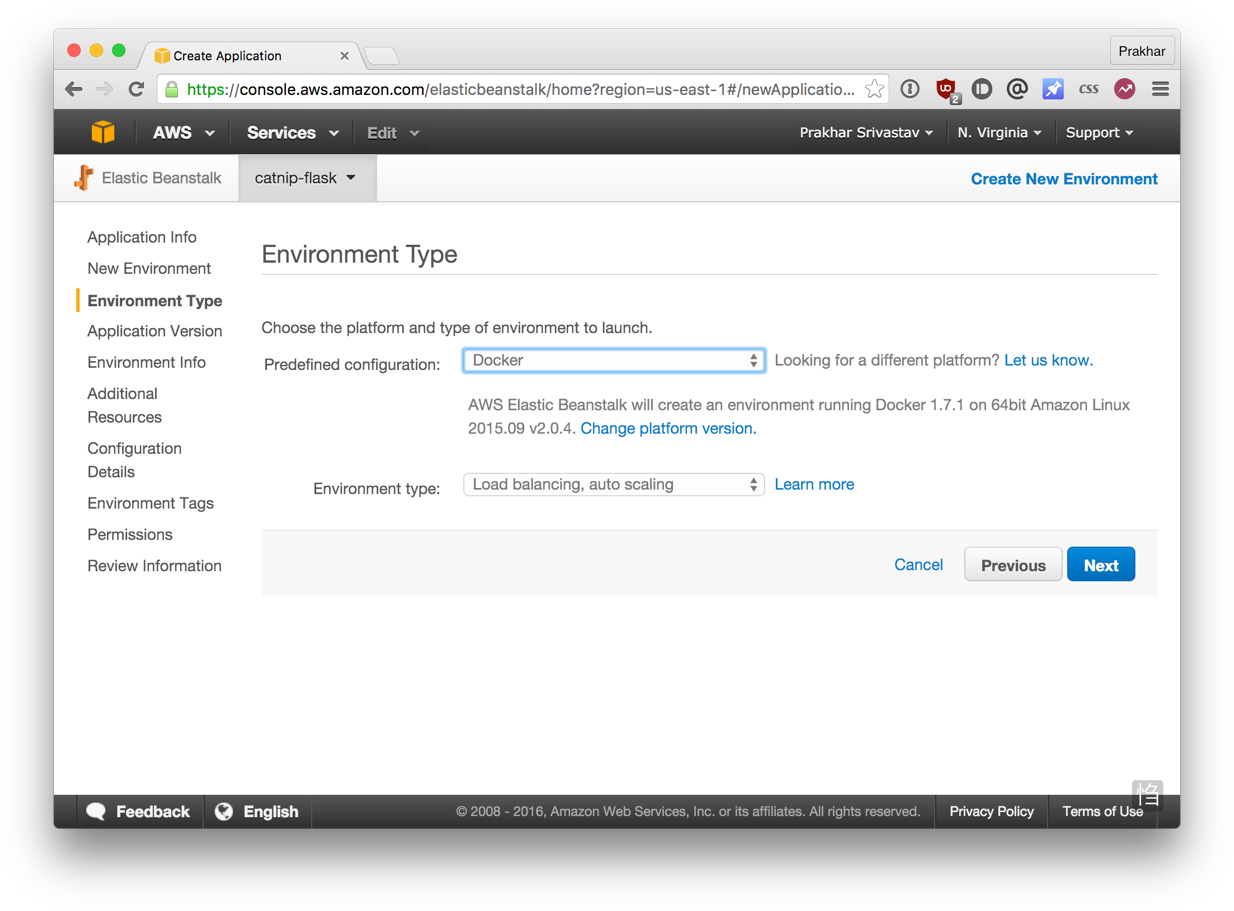

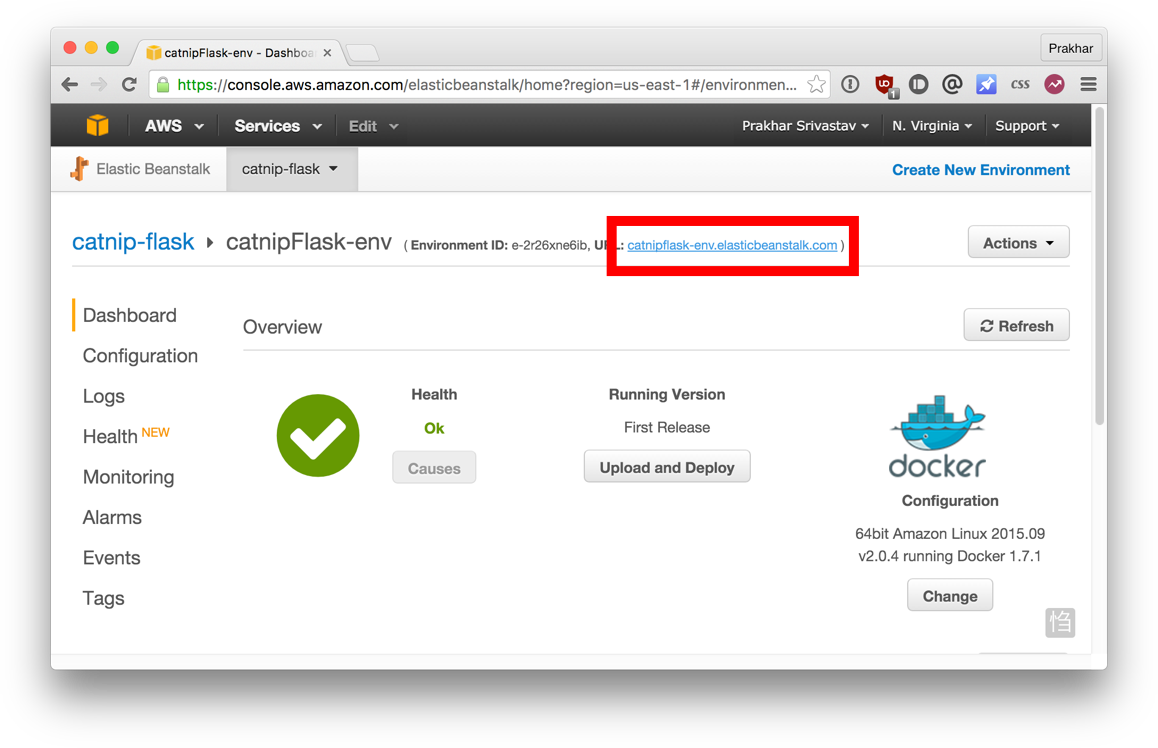

…Dockerrun.aws.json file located in the flask-app folder and edit the Name of the image to your image's name. Don't worry, I'll explain the contents of the file shortly. When you are done, click on the radio button for "upload your own" and choose this file.

…t1.micro. This is very important as this is the free instance by AWS. You can optionally choose a key pair to log in. If you don't know what that means, feel free to ignore this for now. We'll leave everything else at the defaults and forge ahead.

…Dockerrun.aws.json file contains. This file is basically an AWS-specific file that tells EB details about our application and Docker configuration.

{

"AWSEBDockerrunVersion": "1",

"Image": {

"Name": "prakhar1989/catnip",

"Update": "true"

},

"Ports": [

{

"ContainerPort": "5000"

}

],

"Logging": "/var/log/nginx"

}
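Since the easiest mistake here is forgetting to change Name, a quick local check before uploading can save a failed deploy. The sketch below parses the file with Python's standard json module and checks the fields discussed above. check_dockerrun and its rules are my own assumptions about what is worth checking; it is not an AWS validator. In practice you would read the text from flask-app/Dockerrun.aws.json instead of the inline copy.

```python
import json
from typing import List

# Inline copy of the Dockerrun.aws.json shown above.
DOCKERRUN = """
{
  "AWSEBDockerrunVersion": "1",
  "Image": {"Name": "prakhar1989/catnip", "Update": "true"},
  "Ports": [{"ContainerPort": "5000"}],
  "Logging": "/var/log/nginx"
}
"""


def check_dockerrun(text: str, expected_image: str) -> List[str]:
    """Return problems found in a single-container Dockerrun.aws.json (empty list if none)."""
    config = json.loads(text)
    problems = []
    if str(config.get("AWSEBDockerrunVersion")) != "1":
        problems.append('AWSEBDockerrunVersion is not "1"')
    name = config.get("Image", {}).get("Name")
    if name != expected_image:
        problems.append(f"Image.Name is {name!r}, expected {expected_image!r}")
    ports = config.get("Ports", [])
    if not ports or not all(str(p.get("ContainerPort", "")).isdigit() for p in ports):
        problems.append("Ports needs at least one numeric ContainerPort")
    return problems


print(check_dockerrun(DOCKERRUN, "prakhar1989/catnip"))  # []
```

The ContainerPort should match the port the Dockerfile EXPOSEs (5000 here).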

$ docker search elasticsearch

NAME DESCRIPTION STARS OFFICIAL AUTOMATED

elasticsearch Elasticsearch is a powerful open source se... 697 [OK]

itzg/elasticsearch Provides an easily configurable Elasticsea... 17 [OK]

tutum/elasticsearch Elasticsearch image - listens in port 9200. 15 [OK]

barnybug/elasticsearch Latest Elasticsearch 1.7.2 and previous re... 15 [OK]

digitalwonderland/elasticsearch Latest Elasticsearch with Marvel & Kibana 12 [OK]

monsantoco/elasticsearch ElasticSearch Docker image 9 [OK]

…docker run and have a single-node ES container running locally in no time.

$ docker run -dp 9200:9200 elasticsearch

d582e031a005f41eea704cdc6b21e62e7a8a42021297ce7ce123b945ae3d3763

$ curl 0.0.0.0:9200

{

"name" : "Ultra-Marine",

"cluster_name" : "elasticsearch",

"version" : {

"number" : "2.1.1",

"build_hash" : "40e2c53a6b6c2972b3d13846e450e66f4375bd71",

"build_timestamp" : "2015-12-15T13:05:55Z",

"build_snapshot" : false,

"lucene_version" : "5.3.1"

},

"tagline" : "You Know, for Search"

}
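The same check can be scripted. Here is a minimal sketch using only Python's standard library (urllib.request and json). It assumes ES is published on localhost:9200 as above; with Docker Toolbox, use your docker-machine IP instead. fetch_root and es_version are hypothetical helpers.

```python
import json
import urllib.request


def es_version(body: str) -> str:
    """Pull the version number out of the JSON that Elasticsearch serves at its root URL."""
    return json.loads(body)["version"]["number"]


def fetch_root(url: str = "http://localhost:9200", timeout: float = 5.0) -> str:
    """GET the root endpoint; this needs the ES container started above to be running."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return response.read().decode("utf-8")


# With the container running:
# print(es_version(fetch_root()))
```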

…Dockerfile. In the last section, we used the python:3-onbuild image as our base image. This time, however, apart from installing Python dependencies via pip, we want our application to also generate our minified JavaScript file for production. For this, we'll require Node.js. Since we need a custom build step, we'll start from the ubuntu base image and build our Dockerfile from scratch.

Note: If you find that an existing image doesn't cater to your needs, feel free to start from another base image and tweak it yourself. For most of the images on Docker Hub, you should be able to find the corresponding Dockerfile on GitHub. Reading through existing Dockerfiles is one of the best ways to learn how to roll your own.

# start from base

FROM ubuntu:14.04

MAINTAINER Prakhar Srivastav

# install system-wide deps for python and node

RUN apt-get -yqq update

RUN apt-get -yqq install python-pip python-dev

RUN apt-get -yqq install nodejs npm

RUN ln -s /usr/bin/nodejs /usr/bin/node

# copy our application code

ADD flask-app /opt/flask-app

WORKDIR /opt/flask-app

# fetch app specific deps

RUN npm install

RUN npm run build

RUN pip install -r requirements.txt

# expose port

EXPOSE 5000

# start app

CMD [ "python", "./app.py" ]

…apt-get to install the dependencies, namely Python and Node. The -yqq flags suppress output and assume "Yes" to all prompts. We also create a symbolic link for the node binary to deal with backward-compatibility issues.

…ADD command to copy our application into a new directory in the container, /opt/flask-app. This is where our code will reside. We also set this as our working directory, so that the following commands will be run in the context of this location. Now that our system-wide dependencies are installed, we get around to installing app-specific ones. First, we tackle Node by installing the packages from npm and running the build command as defined in our package.json file. We finish the file off by installing the Python packages, exposing the port and defining the CMD to run, as we did in the last section.

Let's build the image (replace prakhar1989 with your username below):

$ docker build -t prakhar1989/foodtrucks-web .

…docker build after any subsequent changes you make to the application code will be almost instantaneous. Now let's try running our app.

$ docker run -P prakhar1989/foodtrucks-web

Unable to connect to ES. Retying in 5 secs...

Unable to connect to ES. Retying in 5 secs...

Unable to connect to ES. Retying in 5 secs...

Out of retries. Bailing out...
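Those log lines come from the Flask app retrying its connection to ES before giving up. The pattern can be sketched as below. This is a hypothetical reconstruction, not the app's actual code. It catches Python's built-in ConnectionError so it runs standalone; with the elasticsearch client you would catch that library's own connection exception instead. The sleep parameter is injectable so the loop can be tested without waiting.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


def connect_with_retries(
    connect: Callable[[], T],
    retries: int = 3,
    delay: float = 5.0,
    sleep: Callable[[float], None] = time.sleep,
) -> Optional[T]:
    """Call connect() up to `retries` times, pausing `delay` seconds after each failure."""
    for _ in range(retries):
        try:
            return connect()
        except ConnectionError:
            print(f"Unable to connect. Retrying in {delay:g} secs...")
            sleep(delay)
    print("Out of retries. Bailing out...")
    return None
```

Retrying longer would not help in this case: the Flask container cannot see ES at all, which is the problem the rest of this section tackles.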

…docker ps and see what we have.

$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

e931ab24dedc elasticsearch "/docker-entrypoint.s" 2 seconds ago Up 2 seconds 0.0.0.0:9200->9200/tcp, 9300/tcp cocky_spence

…0.0.0.0:9200 port, which we can access directly. If we can tell our Flask app to connect to this URL, it should be able to connect and talk to ES, right? Let's dig into our Python code and see how the connection details are defined.

es = Elasticsearch(host='es')

…0.0.0.0 host (the port by default is 9200), and that should make it work, right? Unfortunately, that is not correct, since 0.0.0.0 is the IP for accessing the ES container from the host machine, i.e. from my Mac. Another container will not be able to reach it on the same IP address. Okay, if not that IP, then which IP address should the ES container be accessible by? I'm glad you asked.

$ docker network ls

NETWORK ID NAME DRIVER

075b9f628ccc none null

be0f7178486c host host

8022115322ec bridge bridge

$ docker network inspect bridge

[

{

"Name": "bridge",

"Id": "8022115322ec80613421b0282e7ee158ec41e16f565a3e86fa53496105deb2d7",

"Scope": "local",

"Driver": "bridge",

"IPAM": {

"Driver": "default",

"Config": [

{

"Subnet": "172.17.0.0/16"

}

]

},

"Containers": {

"e931ab24dedc1640cddf6286d08f115a83897c88223058305460d7bd793c1947": {

"EndpointID": "66965e83bf7171daeb8652b39590b1f8c23d066ded16522daeb0128c9c25c189",

"MacAddress": "02:42:ac:11:00:02",

"IPv4Address": "172.17.0.2/16",

"IPv6Address": ""

}

},

"Options": {

"com.docker.network.bridge.default_bridge": "true",

"com.docker.network.bridge.enable_icc": "true",

"com.docker.network.bridge.enable_ip_masquerade": "true",

"com.docker.network.bridge.host_binding_ipv4": "0.0.0.0",

"com.docker.network.bridge.name": "docker0",

"com.docker.network.driver.mtu": "1500"

}

}

]
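The Subnet under IPAM and the IPv4Address under Containers tell us where the ES container lives on the default bridge. As a quick sanity check, Python's standard ipaddress module can confirm that the container's address falls inside that subnet. in_subnet is a hypothetical helper. An address on a different bridge network, say 172.18.0.2, would return False.

```python
import ipaddress


def in_subnet(subnet: str, address: str) -> bool:
    """True if address (with or without a /prefix suffix) lies inside subnet."""
    return ipaddress.ip_interface(address).ip in ipaddress.ip_network(subnet)


# Values taken from the docker network inspect bridge output above.
print(in_subnet("172.17.0.0/16", "172.17.0.2/16"))  # True
```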

…e931ab24dedc is listed under the Containers section in the output. We also see the IP address this container has been allotted: 172.17.0.2. Is this the IP address that we're looking for? Let's find out by running our Flask container and trying to access this IP.

$ docker run -it --rm prakhar1989/foodtrucks-web bash

root@35180ccc206a:/opt/flask-app# curl 172.17.0.2:9200

bash: curl: command not found

root@35180ccc206a:/opt/flask-app# apt-get -yqq install curl

root@35180ccc206a:/opt/flask-app# curl 172.17.0.2:9200

{

"name" : "Jane Foster",

"cluster_name" : "elasticsearch",

"version" : {

"number" : "2.1.1",

"build_hash" : "40e2c53a6b6c2972b3d13846e450e66f4375bd71",

"build_timestamp" : "2015-12-15T13:05:55Z",

"build_snapshot" : false,

"lucene_version" : "5.3.1"

},

"tagline" : "You Know, for Search"

}

root@35180ccc206a:/opt/flask-app# exit

…bash process. The --rm flag is convenient for running one-off commands, since the container gets cleaned up when its work is done. We try a curl, but we need to install it first. Once we do that, we see that we can indeed talk to ES on 172.17.0.2:9200. Awesome!

…/etc/hosts file of the Flask container so that it knows that the es hostname stands for 172.17.0.2. If the IP keeps changing, manually editing this entry would be quite tedious.

…/etc/hosts problem, and we'll quickly see how.

$ docker network create foodtrucks

1a3386375797001999732cb4c4e97b88172d983b08cd0addfcb161eed0c18d89

$ docker network ls

NETWORK ID NAME DRIVER

1a3386375797 foodtrucks bridge

8022115322ec bridge bridge

075b9f628ccc none null

be0f7178486c host host

The network create command creates a new bridge network, which is what we need at the moment. There are other kinds of networks that you can create, and you are encouraged to read about them in the official docs.

…--net flag. Let's do that, but first, we will stop our ES container that is running in the bridge (default) network.

$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

e931ab24dedc elasticsearch "/docker-entrypoint.s" 4 hours ago Up 4 hours 0.0.0.0:9200->9200/tcp, 9300/tcp cocky_spence

$ docker stop e931ab24dedc

e931ab24dedc

$ docker run -dp 9200:9200 --net foodtrucks --name es elasticsearch

2c0b96f9b8030f038e40abea44c2d17b0a8edda1354a08166c33e6d351d0c651

$ docker network inspect foodtrucks

[

{

"Name": "foodtrucks",

"Id": "1a3386375797001999732cb4c4e97b88172d983b08cd0addfcb161eed0c18d89",

"Scope": "local",

"Driver": "bridge",

"IPAM": {

"Driver": "default",

"Config": [

{}

]

},

"Containers": {

"2c0b96f9b8030f038e40abea44c2d17b0a8edda1354a08166c33e6d351d0c651": {

"EndpointID": "15eabc7989ef78952fb577d0013243dae5199e8f5c55f1661606077d5b78e72a",

"MacAddress": "02:42:ac:12:00:02",

"IPv4Address": "172.18.0.2/16",

"IPv6Address": ""

}

},

"Options": {}

}

]

…es. Now, before we try to run our Flask container, let's inspect what happens when we launch it in a network.

$ docker run -it --rm --net foodtrucks prakhar1989/foodtrucks-web bash

root@53af252b771a:/opt/flask-app# cat /etc/hosts

172.18.0.3 53af252b771a

127.0.0.1 localhost

::1 localhost ip6-localhost ip6-loopback

fe00::0 ip6-localnet

ff00::0 ip6-mcastprefix

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters

172.18.0.2 es

172.18.0.2 es.foodtrucks

root@53af252b771a:/opt/flask-app# curl es:9200

bash: curl: command not found

root@53af252b771a:/opt/flask-app# apt-get -yqq install curl

root@53af252b771a:/opt/flask-app# curl es:9200

{

"name" : "Doctor Leery",

"cluster_name" : "elasticsearch",

"version" : {

"number" : "2.1.1",

"build_hash" : "40e2c53a6b6c2972b3d13846e450e66f4375bd71",

"build_timestamp" : "2015-12-15T13:05:55Z",

"build_snapshot" : false,

"lucene_version" : "5.3.1"

},

"tagline" : "You Know, for Search"

}

root@53af252b771a:/opt/flask-app# ls

app.py node_modules package.json requirements.txt static templates webpack.config.js

root@53af252b771a:/opt/flask-app# python app.py

Index not found...

Loading data in elasticsearch ...

Total trucks loaded: 733

* Running on http://0.0.0.0:5000/ (Press CTRL+C to quit)

root@53af252b771a:/opt/flask-app# exit
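The last two lines of that /etc/hosts file are what make es:9200 work from inside the Flask container. The small Python sketch below reads such a file into a name-to-address map. The HOSTS string is an abbreviated copy of the output above, and parse_hosts is a hypothetical helper. Inside a container you would read /etc/hosts itself, or simply let socket.gethostbyname('es') do the lookup. Newer Docker versions resolve names on user-defined networks through an embedded DNS server rather than these entries.

```python
from typing import Dict

# Abbreviated copy of the /etc/hosts output shown above.
HOSTS = """\
172.18.0.3 53af252b771a
127.0.0.1 localhost
::1 localhost ip6-localhost ip6-loopback
172.18.0.2 es
172.18.0.2 es.foodtrucks
"""


def parse_hosts(text: str) -> Dict[str, str]:
    """Map every hostname or alias in /etc/hosts-style text to its address."""
    table: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and surrounding whitespace
        if not line:
            continue
        address, *names = line.split()
        for name in names:
            table.setdefault(name, address)  # keep the first entry seen for each name
    return table


hosts = parse_hosts(HOSTS)
print(hosts["es"])  # 172.18.0.2
```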

…/etc/hosts, which means that es:9200 correctly resolves to the IP address of the ES container. Great! Let's launch our Flask container for real now:

$ docker run -d --net foodtrucks -p 5000:5000 --name foodtrucks-web prakhar1989/foodtrucks-web

2a1b77e066e646686f669bab4759ec1611db359362a031667cacbe45c3ddb413

$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

2a1b77e066e6 prakhar1989/foodtrucks-web "python ./app.py" 2 seconds ago Up 1 seconds 0.0.0.0:5000->5000/tcp foodtrucks-web

2c0b96f9b803 elasticsearch "/docker-entrypoint.s" 21 minutes ago Up 21 minutes 0.0.0.0:9200->9200/tcp, 9300/tcp es

$ curl -I 0.0.0.0:5000

HTTP/1.0 200 OK

Content-Type: text/html; charset=utf-8

Content-Length: 3697

Server: Werkzeug/0.11.2 Python/2.7.6

Date: Sun, 10 Jan 2016 23:58:53 GMT

#!/bin/bash

# build the flask container

docker build -t prakhar1989/foodtrucks-web .

# create the network

docker network create foodtrucks

# start the ES container

docker run -d --net foodtrucks -p 9200:9200 -p 9300:9300 --name es elasticsearch

# start the flask app container

docker run -d --net foodtrucks -p 5000:5000 --name foodtrucks-web prakhar1989/foodtrucks-web

$ git clone https://github.com/prakhar1989/FoodTrucks

$ cd FoodTrucks

$ ./setup-docker.sh

…docker network is a relatively new feature; it was part of the Docker 1.9 release. Before network came along, links were the accepted way of getting containers to talk to each other. According to the official docs, linking is expected to be deprecated in future releases. In case you stumble across tutorials or blog posts that use link to bridge containers, remember to use network instead.

So really, at this point, that's what Docker is about: running processes. Docker offers a quite rich API to run the processes: shared volumes (directories) between containers (i.e. running images), port forwarding from the host to the container, displaying logs, and so on. But that's it: Docker, as of now, remains at the process level.

While it provides options to orchestrate multiple containers to create a single "app", it doesn't address the management of such a group of containers as a single entity. And that's where tools such as Fig come in: talking about a group of containers as a single entity. Think "run an app" (i.e. "run an orchestrated cluster of containers") instead of "run a container".

…docker-compose.yml that can be used to bring up an application and the suite of services it depends on with just one command.

…docker-compose.yml file for our SF-Foodtrucks app and evaluate whether Docker Compose lives up to its promise.

…pip install docker-compose. Test your installation with:

$ docker-compose version

docker-compose version 1.7.1, build 0a9ab35

docker-py version: 1.8.1

CPython version: 2.7.9

OpenSSL version: OpenSSL 1.0.1j 15 Oct 2014

…docker-compose.yml. The syntax for the YAML file is quite simple, and the repo already contains the docker-compose file that we'll be using.

version: "2"
services:
  es:
    image: elasticsearch
  web:
    image: prakhar1989/foodtrucks-web
    command: python app.py
    ports:
      - "5000:5000"
    volumes:
      - .:/code

…es and web. For each service that Docker needs to run, we can add additional parameters, of which image (or, alternatively, build) is required. For es, we just refer to the elasticsearch image available on Docker Hub. For our Flask app, we refer to the image that we built at the beginning of this section.

With the command and ports parameters, we provide more information about the container. The volumes parameter specifies a mount point in our web container where the code will reside. This is purely optional and is useful if you need access to logs etc. Refer to the online reference to learn more about the parameters this file supports.

Note: You must be inside the directory with the docker-compose.yml file in order to execute most Compose commands.
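Structurally, this file is just a mapping of service names to settings, which makes simple pre-flight checks easy to script. The minimal sketch below assumes the file has already been loaded into a dict; a YAML parser such as PyYAML's yaml.safe_load would produce this shape, but the dict is typed in here so the example needs only the standard library. services_without_source and host_ports are hypothetical helpers. host_ports lists the host ports Compose will try to bind, which is handy when checking that those ports are free.

```python
from typing import Dict, List

# The services section of docker-compose.yml above, typed in as a Python dict.
SERVICES = {
    "es": {"image": "elasticsearch"},
    "web": {
        "image": "prakhar1989/foodtrucks-web",
        "command": "python app.py",
        "ports": ["5000:5000"],
        "volumes": [".:/code"],
    },
}


def services_without_source(services: Dict[str, dict]) -> List[str]:
    """Names of services that specify neither an image nor a build context."""
    return [name for name, spec in services.items() if "image" not in spec and "build" not in spec]


def host_ports(services: Dict[str, dict]) -> Dict[str, List[str]]:
    """Host ports each service publishes, from 'HOST:CONTAINER' or 'IP:HOST:CONTAINER' entries."""
    result: Dict[str, List[str]] = {}
    for name, spec in services.items():
        parts = [str(entry).split(":") for entry in spec.get("ports", [])]
        # A bare 'CONTAINER' entry has no fixed host port, so it is skipped.
        result[name] = [p[-2] for p in parts if len(p) >= 2]
    return result


print(services_without_source(SERVICES))  # []
print(host_ports(SERVICES))  # {'es': [], 'web': ['5000']}
```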

…docker-compose in action. But before we start, we need to make sure the ports are free. So if you have the Flask and ES containers running, let's turn them off.

$ docker stop $(docker ps -q)

39a2f5df14ef

2a1b77e066e6

…docker-compose. Navigate to the FoodTrucks directory and run docker-compose up.

$ docker-compose up

Creating network "foodtrucks_default" with the default driver

Creating foodtrucks_es_1

Creating foodtrucks_web_1

Attaching to foodtrucks_es_1, foodtrucks_web_1

es_1 | [2016-01-11 03:43:50,300][INFO ][node ] [Comet] version[2.1.1], pid[1], build[40e2c53/2015-12-15T13:05:55Z]

es_1 | [2016-01-11 03:43:50,307][INFO ][node ] [Comet] initializing ...

es_1 | [2016-01-11 03:43:50,366][INFO ][plugins ] [Comet] loaded [], sites []

es_1 | [2016-01-11 03:43:50,421][INFO ][env ] [Comet] using [1] data paths, mounts [[/usr/share/elasticsearch/data (/dev/sda1)]], net usable_space [16gb], net total_space [18.1gb], spins? [possibly], types [ext4]

es_1 | [2016-01-11 03:43:52,626][INFO ][node ] [Comet] initialized

es_1 | [2016-01-11 03:43:52,632][INFO ][node ] [Comet] starting ...

es_1 | [2016-01-11 03:43:52,703][WARN ][common.network ] [Comet] publish address: {0.0.0.0} is a wildcard address, falling back to first non-loopback: {172.17.0.2}

es_1 | [2016-01-11 03:43:52,704][INFO ][transport ] [Comet] publish_address {172.17.0.2:9300}, bound_addresses {[::]:9300}

es_1 | [2016-01-11 03:43:52,721][INFO ][discovery ] [Comet] elasticsearch/cEk4s7pdQ-evRc9MqS2wqw

es_1 | [2016-01-11 03:43:55,785][INFO ][cluster.service ] [Comet] new_master {Comet}{cEk4s7pdQ-evRc9MqS2wqw}{172.17.0.2}{172.17.0.2:9300}, reason: zen-disco-join(elected_as_master, [0] joins received)

es_1 | [2016-01-11 03:43:55,818][WARN ][common.network ] [Comet] publish address: {0.0.0.0} is a wildcard address, falling back to first non-loopback: {172.17.0.2}

es_1 | [2016-01-11 03:43:55,819][INFO ][http ] [Comet] publish_address {172.17.0.2:9200}, bound_addresses {[::]:9200}

es_1 | [2016-01-11 03:43:55,819][INFO ][node ] [Comet] started

es_1 | [2016-01-11 03:43:55,826][INFO ][gateway ] [Comet] recovered [0] indices into cluster_state

es_1 | [2016-01-11 03:44:01,825][INFO ][cluster.metadata ] [Comet] [sfdata] creating index, cause [auto(index api)], templates [], shards [5]/[1], mappings [truck]

es_1 | [2016-01-11 03:44:02,373][INFO ][cluster.metadata ] [Comet] [sfdata] update_mapping [truck]

es_1 | [2016-01-11 03:44:02,510][INFO ][cluster.metadata ] [Comet] [sfdata] update_mapping [truck]

es_1 | [2016-01-11 03:44:02,593][INFO ][cluster.metadata ] [Comet] [sfdata] update_mapping [truck]

es_1 | [2016-01-11 03:44:02,708][INFO ][cluster.metadata ] [Comet] [sfdata] update_mapping [truck]

es_1 | [2016-01-11 03:44:03,047][INFO ][cluster.metadata ] [Comet] [sfdata] update_mapping [truck]

web_1 | * Running on http://0.0.0.0:5000/ (Press CTRL+C to quit)

web_1 | * Running on http://0.0.0.0:5000/ (Press CTRL+C to quit)

Killing foodtrucks_web_1 ... done

Killing foodtrucks_es_1 ... done

$ docker-compose up -d

Starting foodtrucks_es_1

Starting foodtrucks_web_1

$ docker-compose ps

Name Command State Ports

----------------------------------------------------------------------------------

foodtrucks_es_1 /docker-entrypoint.sh elas ... Up 9200/tcp, 9300/tcp

foodtrucks_web_1 python app.py Up 0.0.0.0:5000->5000/tcp

$ docker-compose stop

Stopping foodtrucks_web_1 ... done

Stopping foodtrucks_es_1 ... done

…foodtrucks network that we created last time. This should not be required, since Compose will automatically manage this for us.

$ docker network rm foodtrucks

$ docker network ls

NETWORK ID NAME DRIVER

4eec273c054e bridge bridge

9347ae8783bd none null

54df57d7f493 host host

$ docker-compose up -d

Recreating foodtrucks_es_1

Recreating foodtrucks_web_1

$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

f50bb33a3242 prakhar1989/foodtrucks-web "python app.py" 14 seconds ago Up 13 seconds 0.0.0.0:5000->5000/tcp foodtrucks_web_1

e299ceeb4caa elasticsearch "/docker-entrypoint.s" 14 seconds ago Up 14 seconds 9200/tcp, 9300/tcp foodtrucks_es_1

$ docker network ls

NETWORK ID NAME DRIVER

0c8b474a9241 bridge bridge

293a141faac3 foodtrucks_default bridge

b44db703cd69 host host

0474c9517805 none null

…foodtrucks_default and attached both new services to that network so that each is discoverable by the other. Each container for a service joins the default network and is both reachable by other containers on that network and discoverable by them at a hostname identical to the container name. Let's see if that information resides in /etc/hosts.

$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

bb72dcebd379 prakhar1989/foodtrucks-web "python app.py" 20 hours ago Up 19 hours 0.0.0.0:5000->5000/tcp foodtrucks_web_1

3338fc79be4b elasticsearch "/docker-entrypoint.s" 20 hours ago Up 19 hours 9200/tcp, 9300/tcp foodtrucks_es_1

$ docker exec -it bb72dcebd379 bash

root@bb72dcebd379:/opt/flask-app# cat /etc/hosts

127.0.0.1 localhost

::1 localhost ip6-localhost ip6-loopback

fe00::0 ip6-localnet

ff00::0 ip6-mcastprefix

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters

172.18.0.2 bb72dcebd379

…es network. So how is our app working? Let's see if we can ping this hostname:

root@bb72dcebd379:/opt/flask-app# ping es

PING es (172.18.0.3) 56(84) bytes of data.

64 bytes from foodtrucks_es_1.foodtrucks_default (172.18.0.3): icmp_seq=1 ttl=64 time=0.049 ms

64 bytes from foodtrucks_es_1.foodtrucks_default (172.18.0.3): icmp_seq=2 ttl=64 time=0.064 ms

^C

--- es ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 999ms

rtt min/avg/max/mdev = 0.049/0.056/0.064/0.010 ms
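The ping above resolved `es` even though the name is not in `/etc/hosts`, so the answer must have come from the resolver. A minimal sketch of doing the same lookup from Python with the standard library's `socket.getaddrinfo`. It assumes the code runs inside a container on the Compose network, where the container's resolver is typically configured to query Docker's embedded DNS server. `resolve_ipv4` is a hypothetical helper.

```python
import socket


def resolve_ipv4(hostname):
    """Return the sorted IPv4 addresses the system resolver gives for hostname.

    getaddrinfo consults /etc/hosts and then the configured DNS servers; on a
    user-defined Docker network that DNS server is typically Docker's own."""
    try:
        infos = socket.getaddrinfo(hostname, None, socket.AF_INET, socket.SOCK_STREAM)
    except socket.gaierror:
        return []  # the name could not be resolved
    # Each entry is (family, type, proto, canonname, (address, port)).
    return sorted({info[4][0] for info in infos})


if __name__ == "__main__":
    # Numeric addresses resolve without any DNS query.
    print(resolve_ipv4("127.0.0.1"))
    # Inside the web container, resolve_ipv4("es") would be expected to
    # return the address seen in the ping output (172.18.0.3 here).
```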

es hostname. It turns out that Docker 1.10 added a new networking system that does service discovery using a DNS server. If you're interested, you can read more about the proposal and release notes.

docker-compose to run our app locally with a single command: docker-compose up. Now that we have a functioning app, we want to share it with the world, get some users, make tons of money and buy a big house in Miami. Executing the last three is beyond the scope of this tutorial, so we'll spend our time instead on figuring out how to deploy our multi-container apps on the cloud with AWS.

docker-compose.yml, it should not take much effort to get up and running on AWS. So let's get started!

$ ecs-cli --version

ecs-cli version 0.1.0 (*cbdc2d5)

ecs and set my region as us-east-1. This is what I'll assume for the rest of this walkthrough.

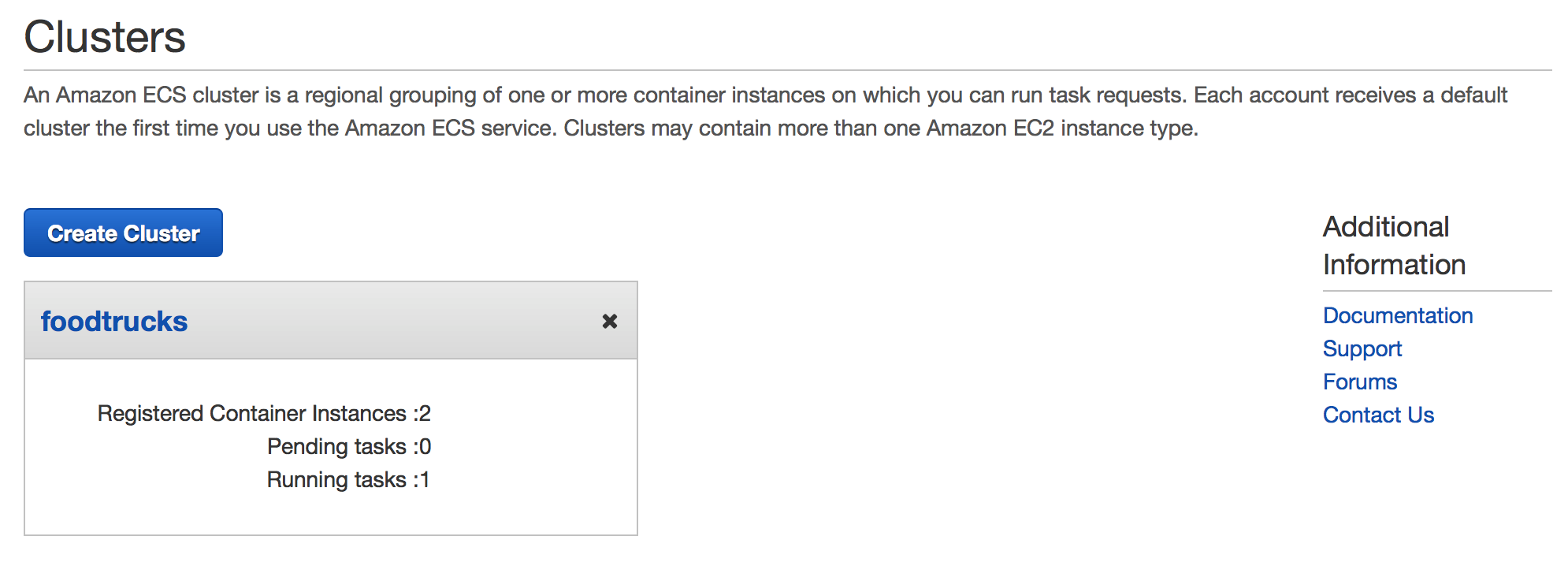

$ ecs-cli configure --region us-east-1 --cluster foodtrucks

INFO[0000] Saved ECS CLI configuration for cluster (foodtrucks)

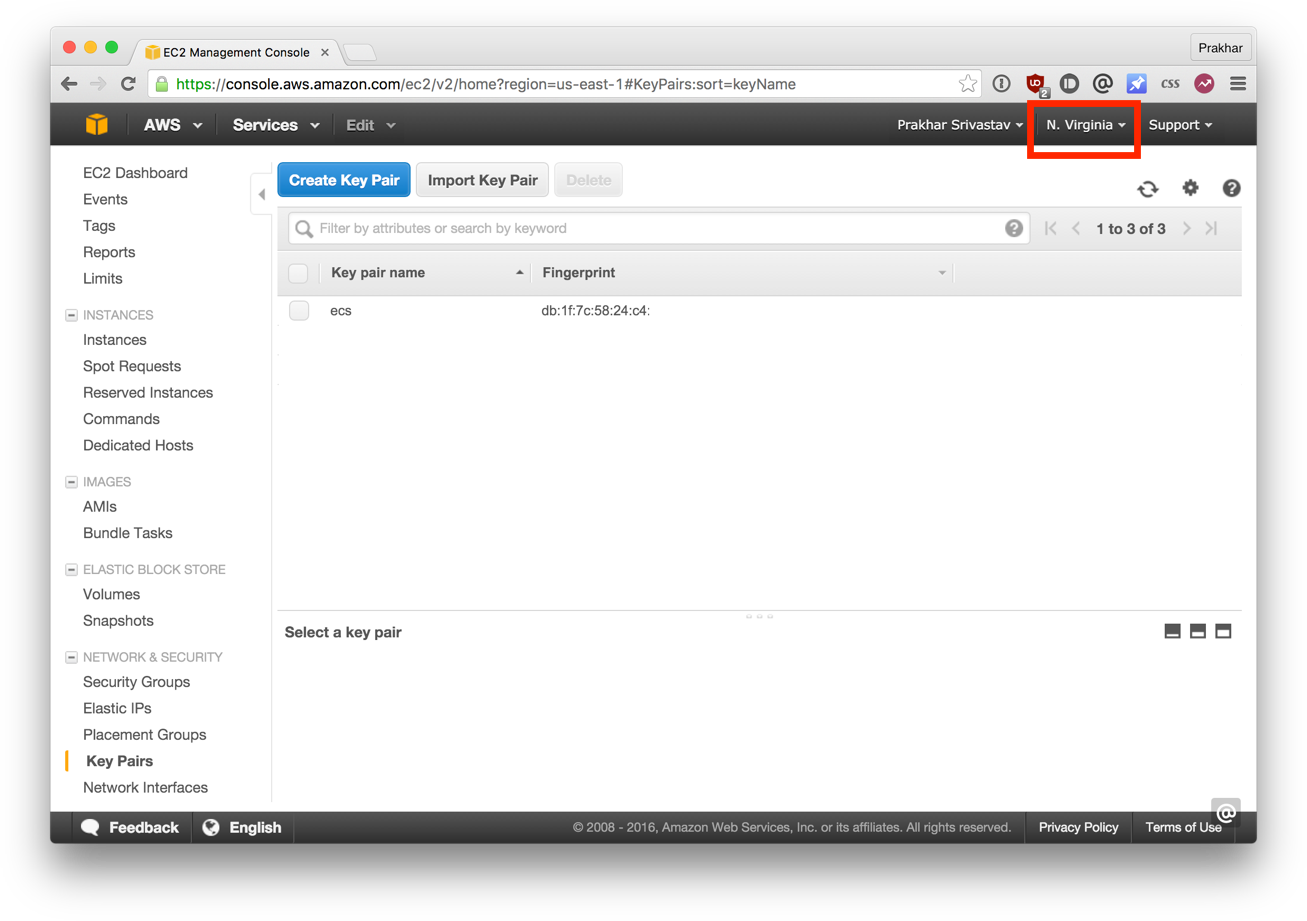

configure command with the region name we want our cluster to reside in and a cluster name. Make sure you provide the same region name that you used when creating the keypair. If you've not configured the AWS CLI on your computer before, you can use the official guide, which explains in detail how to get everything set up.

$ ecs-cli up --keypair ecs --capability-iam --size 2 --instance-type t2.micro

INFO[0000] Created cluster cluster=foodtrucks

INFO[0001] Waiting for your cluster resources to be created

INFO[0001] Cloudformation stack status stackStatus=CREATE_IN_PROGRESS

INFO[0061] Cloudformation stack status stackStatus=CREATE_IN_PROGRESS

INFO[0122] Cloudformation stack status stackStatus=CREATE_IN_PROGRESS

INFO[0182] Cloudformation stack status stackStatus=CREATE_IN_PROGRESS

INFO[0242] Cloudformation stack status stackStatus=CREATE_IN_PROGRESS
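If you want to script cluster creation, the `ecs-cli up` call above can be assembled from its parameters. This is a minimal sketch that only knows the four flags used in this walkthrough (`--keypair`, `--capability-iam`, `--size`, `--instance-type`); `ecs_cli_up_args` is a hypothetical helper, and it builds the command without running it.

```python
import shlex


def ecs_cli_up_args(keypair, size, instance_type, capability_iam=True):
    """Build the argument list for the `ecs-cli up` call used in this walkthrough.

    --capability-iam acknowledges that the command may create IAM resources."""
    if size < 1:
        raise ValueError("cluster size must be at least 1")
    args = ["ecs-cli", "up", "--keypair", keypair]
    if capability_iam:
        args.append("--capability-iam")
    args += ["--size", str(size), "--instance-type", instance_type]
    return args


if __name__ == "__main__":
    args = ecs_cli_up_args("ecs", 2, "t2.micro")
    # Print the shell-quoted command; to run it, use subprocess.run(args, check=True).
    print(shlex.join(args))
```

Keeping the arguments in a list (rather than one string) means `subprocess.run` receives them unchanged, with no shell quoting to get wrong.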

ecs in my case), the number of instances that we want to use (--size) and the type of instances that we want the containers to run on (--instance-type). The --capability-iam flag tells the CLI that we acknowledge that this command may create IAM resources.

docker-compose.yml file. We'll need to make a tiny change, so instead of modifying the original, let's make a copy of it and call it aws-compose.yml. The contents of this file (after making the changes) are shown below:

es:
  image: elasticsearch
  cpu_shares: 100
  mem_limit: 262144000
web:
  image: prakhar1989/foodtrucks-web
  cpu_shares: 100
  mem_limit: 262144000
  ports:
    - "80:5000"
  links:
    - es

docker-compose.yml are the mem_limit and cpu_shares values we added for each container. We also got rid of the version and services keys, since AWS doesn't yet support version 2 of the Compose file format. Since our apps will run on t2.micro instances, we allocate 250 MB of memory to each container (mem_limit is given in bytes: 262144000 = 250 × 1024 × 1024). Another thing we need to do before we move on to the next step is to publish our image on Docker Hub. As of this writing, ecs-cli does not support the build command, which Docker Compose supports perfectly.

$ docker push prakhar1989/foodtrucks-web

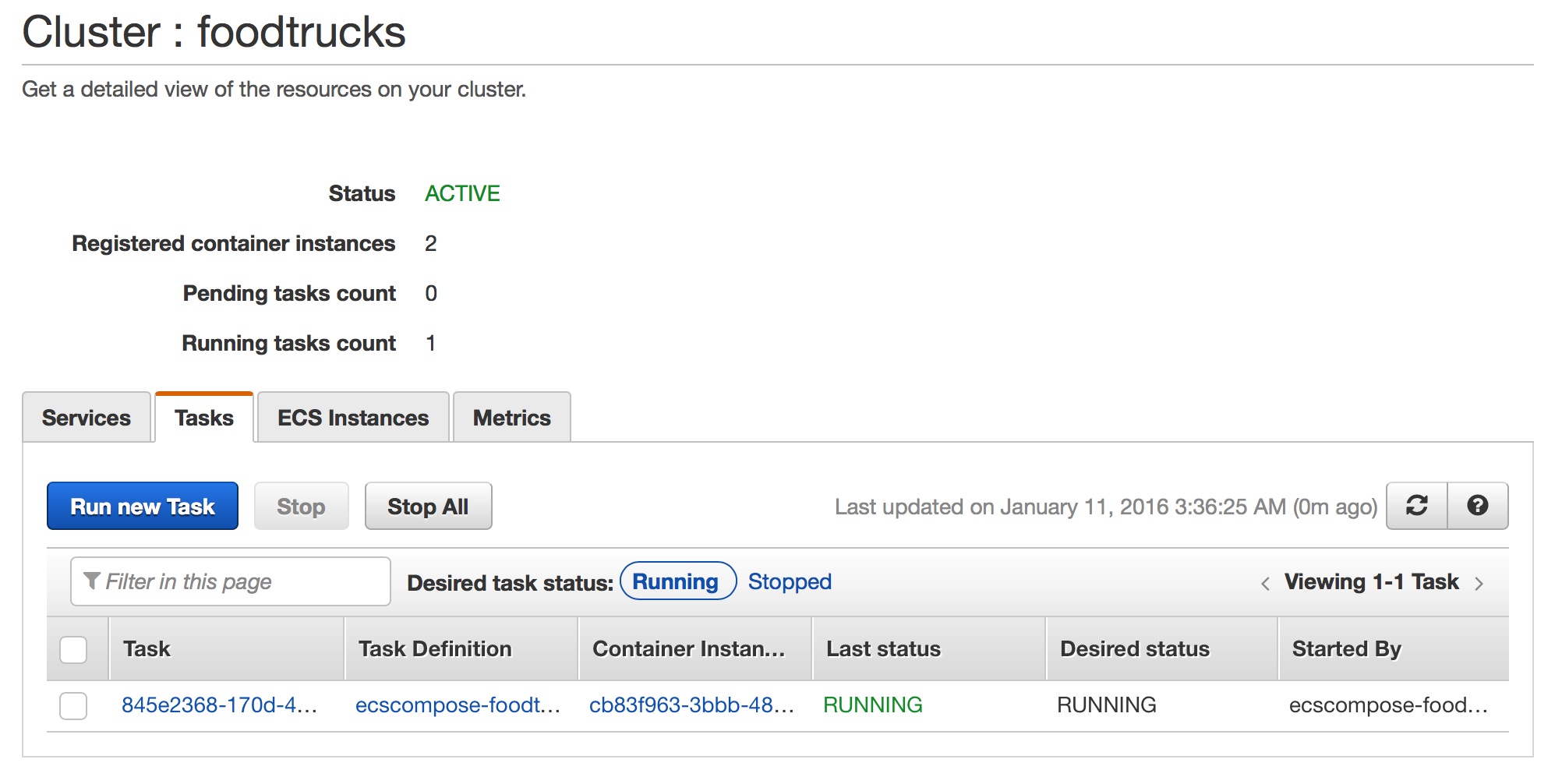
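The changes described above for aws-compose.yml (dropping the version and services keys, adding cpu_shares and mem_limit, relying on a pushed image instead of a build) are mechanical enough to script. A minimal sketch working on plain Python dicts, so it needs no YAML library. `to_ecs_compose` is a hypothetical helper, the input dict is an illustrative example rather than the tutorial's actual docker-compose.yml, and per-service edits such as the `80:5000` port mapping and `links` are still left to you.

```python
import copy

MIB = 1024 * 1024  # mem_limit is expressed in bytes


def to_ecs_compose(v2_config, cpu_shares=100, mem_mib=250):
    """Flatten a Compose v2 dict into the version-1 layout of aws-compose.yml.

    Drops the top-level 'version' and 'services' keys and gives every service
    cpu_shares and mem_limit unless it already sets them."""
    v1_config = {}
    for name, service in v2_config.get("services", {}).items():
        flat = copy.deepcopy(service)
        if "image" not in flat:
            # ecs-cli cannot build images, so each service needs a pushed image.
            raise ValueError(f"service {name!r} needs an 'image' key")
        flat.pop("build", None)
        flat.setdefault("cpu_shares", cpu_shares)
        flat.setdefault("mem_limit", mem_mib * MIB)
        v1_config[name] = flat
    return v1_config


if __name__ == "__main__":
    # Illustrative v2 input, not the tutorial's actual file.
    v2 = {"version": "2", "services": {"es": {"image": "elasticsearch"}}}
    print(to_ecs_compose(v2))
```

With the defaults, each service gets `cpu_shares: 100` and `mem_limit: 262144000`, the same values as in aws-compose.yml. Serializing the result back to YAML is left to whichever YAML library you already use.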

$ ecs-cli compose --file aws-compose.yml up

INFO[0000] Using ECS task definition TaskDefinition=ecscompose-foodtrucks:2

INFO[0000] Starting container... container=845e2368-170d-44a7-bf9f-84c7fcd9ae29/es

INFO[0000] Starting container... container=845e2368-170d-44a7-bf9f-84c7fcd9ae29/web

INFO[0000] Describe ECS container status container=845e2368-170d-44a7-bf9f-84c7fcd9ae29/web desiredStatus=RUNNING lastStatus=PENDING taskDefinition=ecscompose-foodtrucks:2

INFO[0000] Describe ECS container status container=845e2368-170d-44a7-bf9f-84c7fcd9ae29/es desiredStatus=RUNNING lastStatus=PENDING taskDefinition=ecscompose-foodtrucks:2

INFO[0036] Describe ECS container status container=845e2368-170d-44a7-bf9f-84c7fcd9ae29/es desiredStatus=RUNNING lastStatus=PENDING taskDefinition=ecscompose-foodtrucks:2

INFO[0048] Describe ECS container status container=845e2368-170d-44a7-bf9f-84c7fcd9ae29/web desiredStatus=RUNNING lastStatus=PENDING taskDefinition=ecscompose-foodtrucks:2

INFO[0048] Describe ECS container status container=845e2368-170d-44a7-bf9f-84c7fcd9ae29/es desiredStatus=RUNNING lastStatus=PENDING taskDefinition=ecscompose-foodtrucks:2

INFO[0060] Started container... container=845e2368-170d-44a7-bf9f-84c7fcd9ae29/web desiredStatus=RUNNING lastStatus=RUNNING taskDefinition=ecscompose-foodtrucks:2

INFO[0060] Started container... container=845e2368-170d-44a7-bf9f-84c7fcd9ae29/es desiredStatus=RUNNING lastStatus=RUNNING taskDefinition=ecscompose-foodtrucks:2
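Rather than eyeballing the log above, you can check that every container has reached RUNNING by reading the `container=... desiredStatus=... lastStatus=...` fields. This sketch assumes the log format shown above; `latest_status` and `all_running` are hypothetical helpers.

```python
import re

# Matches the key=value fields printed by `ecs-cli compose up` in the log above.
STATUS_RE = re.compile(r"container=(\S+) desiredStatus=(\S+) lastStatus=(\S+)")


def latest_status(log_lines):
    """Map each container to its most recent (desiredStatus, lastStatus) pair."""
    status = {}
    for line in log_lines:
        match = STATUS_RE.search(line)
        if match:
            container, desired, last = match.groups()
            status[container] = (desired, last)  # later lines overwrite earlier ones
    return status


def all_running(log_lines):
    """True when at least one container was reported and all are RUNNING."""
    status = latest_status(log_lines)
    return bool(status) and all(
        desired == "RUNNING" and last == "RUNNING" for desired, last in status.values()
    )
```

Lines such as `Starting container...` carry no status fields and are skipped, so only the `Describe` and `Started` lines count.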

--file argument is used to override the default file (docker-compose.yml) that the CLI will read. If everything went well, you should see desiredStatus=RUNNING lastStatus=RUNNING in the last line.

$ ecs-cli ps

Name State Ports TaskDefinition

845e2368-170d-44a7-bf9f-84c7fcd9ae29/web RUNNING 54.86.14.14:80->5000/tcp ecscompose-foodtrucks:2

845e2368-170d-44a7-bf9f-84c7fcd9ae29/es RUNNING ecscompose-foodtrucks:2
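The Ports column above shows where the web container is reachable from outside. A minimal sketch that extracts that endpoint from the `ecs-cli ps` table, assuming the whitespace-separated layout and the `HOST:PORT->PORT/tcp` notation shown above. `public_endpoints` is a hypothetical helper.

```python
import re

# HOST:HOSTPORT->CONTAINERPORT/tcp, as printed in the Ports column.
PORT_RE = re.compile(r"(\d{1,3}(?:\.\d{1,3}){3}):(\d+)->(\d+)/tcp")


def public_endpoints(ps_output):
    """Return {container: url} for running rows that publish a port."""
    endpoints = {}
    for line in ps_output.splitlines():
        fields = line.split()
        if len(fields) < 3 or fields[1] != "RUNNING":
            continue  # skip the header, blank lines and tasks that are not running
        match = PORT_RE.search(line)
        if match:
            host, host_port, _container_port = match.groups()
            # Port 80 is the HTTP default, so it can be left out of the URL.
            url = f"http://{host}" if host_port == "80" else f"http://{host}:{host_port}"
            endpoints[fields[0]] = url
    return endpoints
```

For the output above, only the web row publishes a port, so the result maps `845e2368-170d-44a7-bf9f-84c7fcd9ae29/web` to `http://54.86.14.14`.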